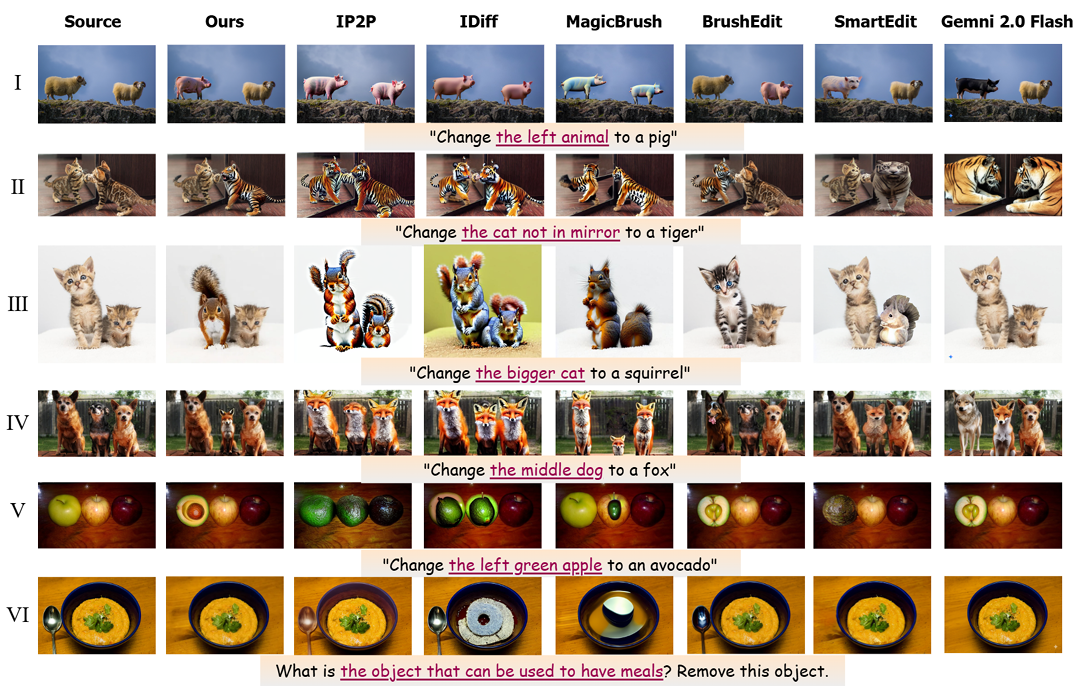

Qualitative comparison of SmartFreeEdit on Reason-Edit with previous instruction-based image editing methods, including InstructPix2Pix (IP2P), InstructDiffusion (IDiff), MagicBrush, BrushEdit, SmartEdit (13B), and the recent Gemini 2.0 Flash. Mask-free methods require no additional mask input, so we run them with the same instructions as baselines for comparison. Our approach demonstrates superior editing capabilities in complex scenarios.

Recent advancements in image editing have utilized large-scale multimodal models to enable intuitive, natural instruction-driven interactions. However, conventional methods still face significant challenges, particularly in spatial reasoning, precise region segmentation, and maintaining semantic consistency, especially in complex scenes. To overcome these challenges, we introduce SmartFreeEdit, a novel end-to-end framework that integrates a multimodal large language model (MLLM) with a hypergraph-enhanced inpainting architecture, enabling precise, mask-free image editing guided exclusively by natural language instructions. The key innovations of SmartFreeEdit include: (1) the introduction of region-aware tokens and a mask embedding paradigm that enhance the model’s spatial understanding of complex scenes; (2) a reasoning segmentation pipeline designed to optimize the generation of editing masks based on natural language instructions; and (3) a hypergraph-augmented inpainting module that preserves both structural integrity and semantic coherence during complex edits, overcoming the limitations of locally focused image generation. Extensive experiments on the Reason-Edit benchmark demonstrate that SmartFreeEdit surpasses current state-of-the-art methods across multiple evaluation metrics, including segmentation accuracy, instruction adherence, and visual quality preservation, while reducing the over-reliance on local information and improving global consistency in the edited image.

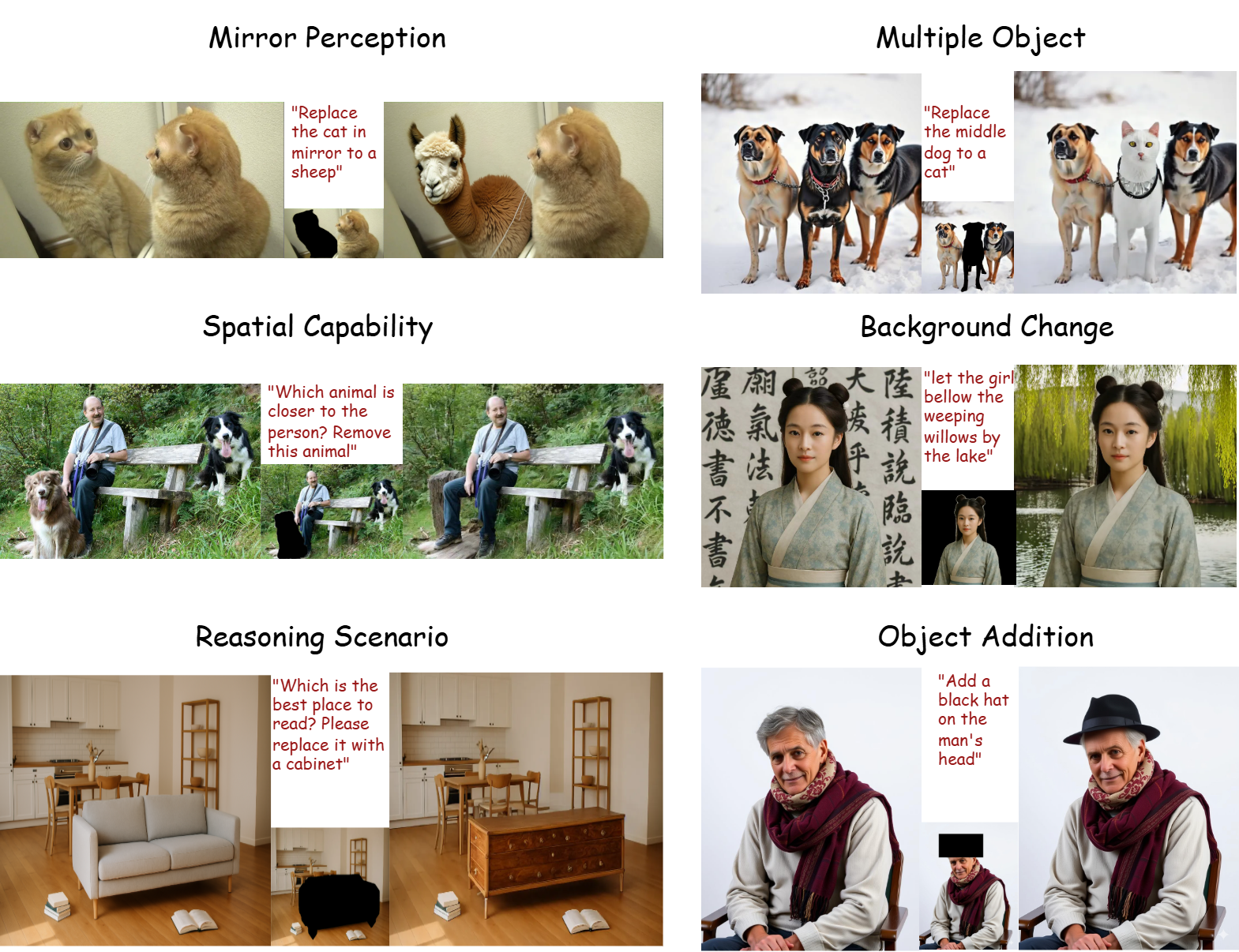

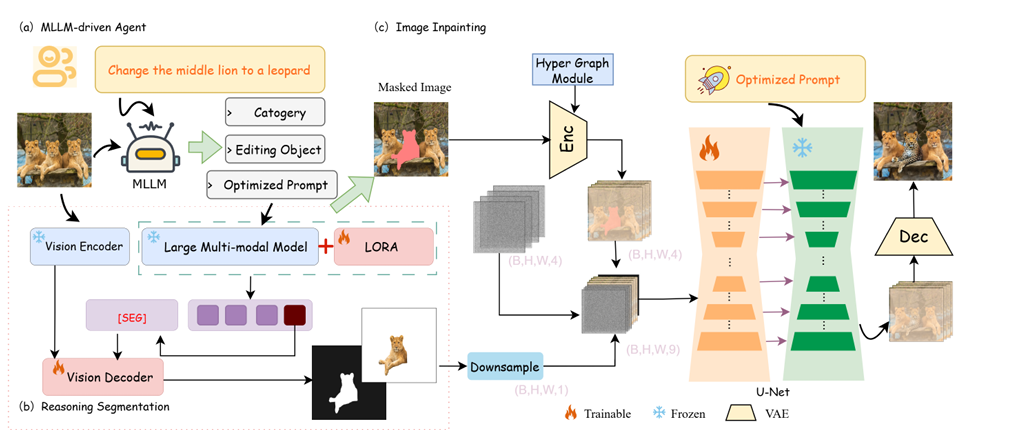

Architecture overview of SmartFreeEdit for instruction-based editing that requires reasoning in complex scenarios. SmartFreeEdit consists of three key components: (1) an MLLM-driven Promptist that decomposes instructions into Editing Objects, Category, and Target Prompt; (2) a reasoning segmentation module that converts the prompt into an inference query and generates reasoning masks; and (3) an inpainting-based Image Editor that uses the hypergraph computation module to enhance its understanding of global image structure for more accurate edits.
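The three-stage flow described above can be sketched as a small Python pipeline. This is only an illustrative skeleton, not the released SmartFreeEdit code: the names `EditPlan`, `run_edit`, and the three `toy_*` stand-ins are hypothetical, the query template is an assumption, and the toy stages replace the real MLLM, segmentation model, and hypergraph-enhanced inpainting model with trivial list operations.

```python
from dataclasses import dataclass
from typing import Callable, List

# Placeholder types (assumption): a tiny grayscale image and a boolean mask.
Image = List[List[int]]
Mask = List[List[bool]]


@dataclass
class EditPlan:
    """Output of the Promptist stage, using the three fields named in the caption."""
    editing_object: str
    category: str
    target_prompt: str


def run_edit(
    image: Image,
    instruction: str,
    promptist: Callable[[Image, str], EditPlan],
    segmenter: Callable[[Image, str], Mask],
    editor: Callable[[Image, Mask, str], Image],
) -> Image:
    """Chain the three stages: plan -> reasoning mask -> inpainting edit."""
    plan = promptist(image, instruction)
    # Hypothetical query template; the real inference query format is not given here.
    query = f"Segment the region to edit: {plan.editing_object}"
    mask = segmenter(image, query)
    return editor(image, mask, plan.target_prompt)


# Toy stand-ins so the skeleton runs end to end (not the real models).
def toy_promptist(image: Image, instruction: str) -> EditPlan:
    return EditPlan(editing_object="cat", category="replace", target_prompt="a dog")


def toy_segmenter(image: Image, query: str) -> Mask:
    # Pretend every non-zero pixel belongs to the editing object.
    return [[pixel > 0 for pixel in row] for row in image]


def toy_editor(image: Image, mask: Mask, target_prompt: str) -> Image:
    # Pretend "inpainting" overwrites masked pixels and leaves the rest untouched.
    return [
        [255 if masked else pixel for pixel, masked in zip(row, mask_row)]
        for row, mask_row in zip(image, mask)
    ]


if __name__ == "__main__":
    edited = run_edit(
        [[0, 5], [7, 0]],
        "Replace the cat with a dog",
        toy_promptist,
        toy_segmenter,
        toy_editor,
    )
    print(edited)
```

Passing the stages in as callables keeps the sketch independent of any particular model; each stand-in could be swapped for a real component with the same input and output shape.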

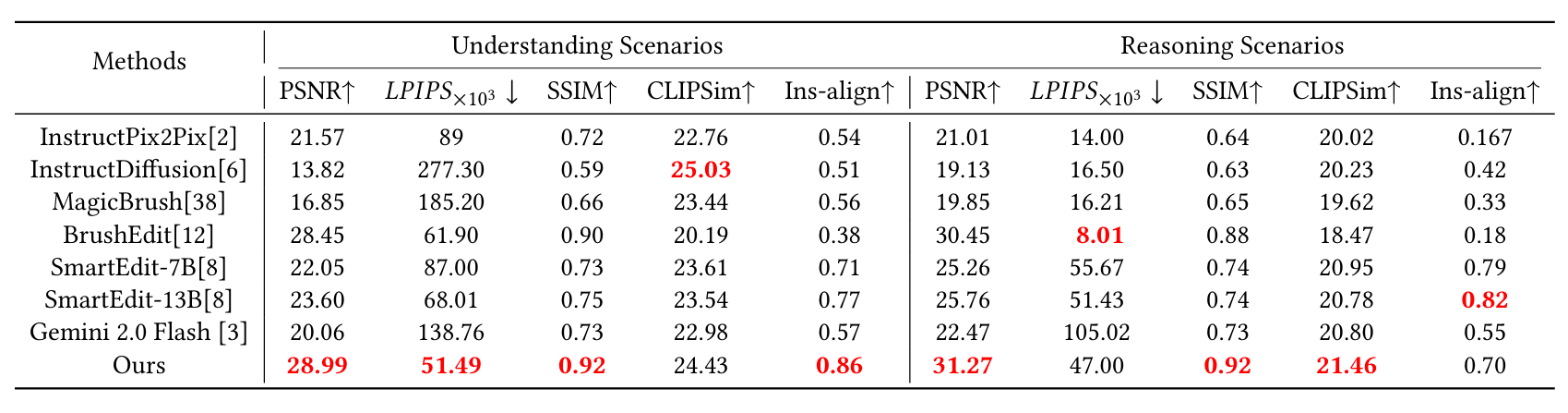

Quantitative comparison of SmartFreeEdit with existing methods on Reason-Edit.

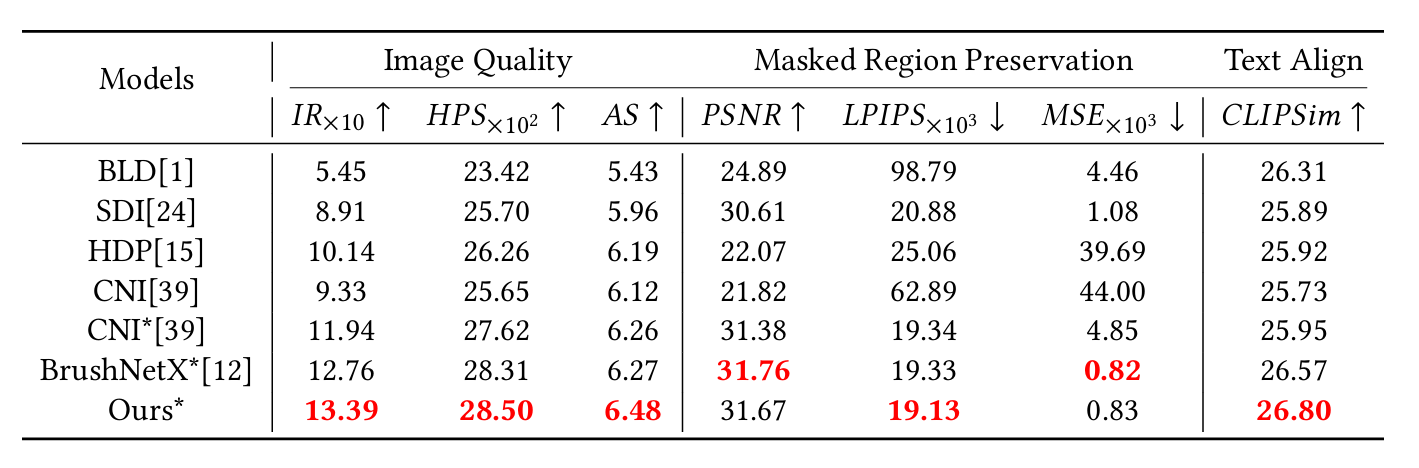

Quantitative comparison of SmartFreeEdit with existing methods on BrushBench.

If you find our work useful, please cite our paper:

    @article{sun2025smartfreeedit,
      title={SmartFreeEdit: Mask-Free Spatial-Aware Image Editing with Complex Instruction Understanding},
      author={Sun, Qianqian and Luo, Jixiang and Zhang, Dell and Li, Xuelong},
      journal={arXiv preprint arXiv:2504.12704},
      year={2025}
    }